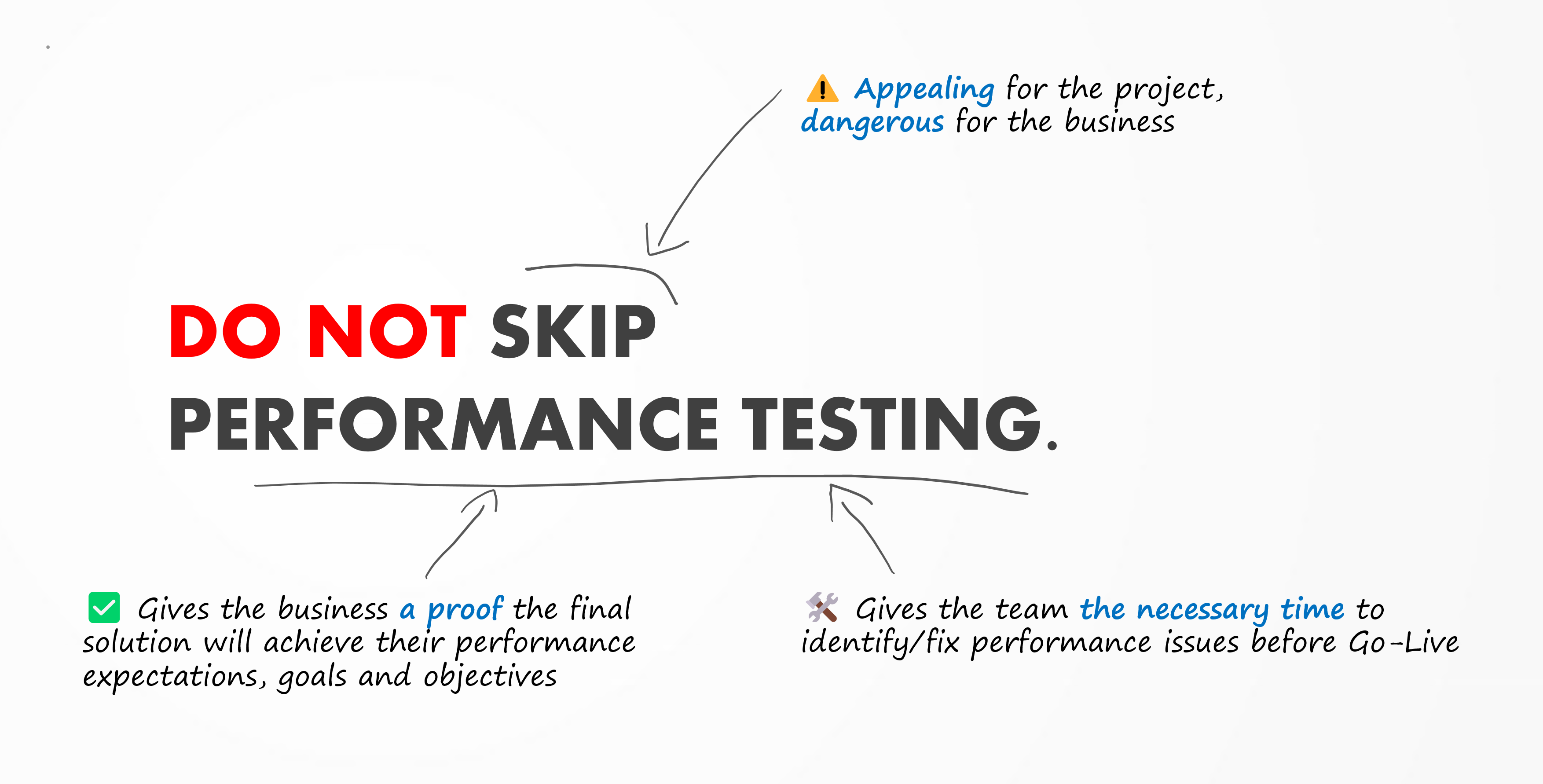

I’ll get straight to the point with this visual:

Skipping performance testing is appealing on paper but high-risk to both the business and the project team.

Skipping performance testing really sounds like a good idea, right?

It essentially removes a key piece of work from the project plan: validating the performance of the Dynamics 365 solution against the business expectations, goals, and objectives.

It seems appealing from a project management perspective:

- No performance testing preparation or iteration activities
- No time “wasted”
- No resources (team, environment, licenses, or money) “wasted”

“Wasted” because: what’s the value of performance tests if we already trust the solution is performant?

This is the central question: how do you know your solution is performant?

Assumptions and consequences

Over our past engagements, several implementation teams made dangerous assumptions about solution performance. Assumptions like:

- We don’t have objectives and goals. It will be fine if user acceptance testing passes.
- The platform is performant. Hence what the team builds on top will also be performant.
- The project team is experienced and knows its stuff. The end product will perform.
- We can always resolve issues after go-live. We first need to go live.
- We are only adding a few users, so this will not have an impact.

Such misconceptions have real consequences. They leave performance targets unclear, they prevent the team from growing in this area, and, more importantly, they foster performance issues after go-live.

As a reminder, the impact of a performance issue is either a slowdown or an outage. With an order-to-cash process, for example, the slowdown (“best” case) would result in fewer orders in, and the outage (worst case) could mean no new orders until the problem is mitigated.

Clear these faulty assumptions from the team’s thinking to allow a thorough performance testing strategy. This will save you from stressful crises after go-live due to business slowdowns and disruptions.

How to properly validate the solution

In most projects, validation happens solely through user acceptance testing (UAT), a customer test phase that confirms the solution is fit-for-purpose (it does what my business needs).

However, this is not enough to validate the solution.

What is the value of a process that’s fit-for-purpose if it breaks under real-life conditions or if it slows down the business?

When UAT is the only validation step, performance considerations are neither discussed nor incorporated into the validation plan used to sign off the final solution.

Keep in mind that performance testing is a customer test phase, and it should not be omitted. Using the performance test results in the exit criteria is critical to sign off the solution with confidence.
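To make "performance test results in the exit criteria" more concrete, here is a minimal sketch of what such a check could look like. It is only an illustration, not a prescribed method: the scenario names, the 95th-percentile targets, and the sample response times are all hypothetical, and the names `PerformanceGoal` and `evaluate_exit_criteria` are invented for this example. In a real project, the goals come from the business and the measurements from your test tooling.

```python
from dataclasses import dataclass
from statistics import quantiles


@dataclass
class PerformanceGoal:
    """A business performance goal for one scenario (hypothetical shape)."""
    scenario: str
    percentile: int      # e.g. 95 means "95% of requests must be at least this fast"
    max_seconds: float   # target response time at that percentile


def percentile_of(samples, pct):
    """Return the pct-th percentile of the samples (linear interpolation)."""
    # quantiles(n=100) returns 99 cut points; index pct - 1 is the pct-th percentile.
    return quantiles(samples, n=100, method="inclusive")[pct - 1]


def evaluate_exit_criteria(goals, measurements):
    """Map each scenario to True (goal met) or False (goal missed or not measured)."""
    results = {}
    for goal in goals:
        samples = measurements.get(goal.scenario, [])
        if len(samples) < 2:
            # No (or too little) data means the goal was not validated.
            results[goal.scenario] = False
            continue
        observed = percentile_of(samples, goal.percentile)
        results[goal.scenario] = observed <= goal.max_seconds
    return results


# Hypothetical goals and measured response times (seconds), for illustration only.
goals = [
    PerformanceGoal("create_sales_order", percentile=95, max_seconds=3.0),
    PerformanceGoal("post_invoice", percentile=95, max_seconds=5.0),
    PerformanceGoal("run_mrp", percentile=95, max_seconds=600.0),
]
measurements = {
    "create_sales_order": [1.0, 1.2, 1.1, 1.3, 2.0],
    "post_invoice": [4.0, 6.5, 7.0, 5.5, 6.0],
    # "run_mrp" was never tested, so it cannot be signed off.
}

results = evaluate_exit_criteria(goals, measurements)
ready_to_sign_off = all(results.values())
```

The point of the sketch is the decision rule, not the tooling: a scenario without a goal or without data counts as "not validated", and the sign-off is based on measured results rather than on sentiment.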

Shaping your performance testing strategy

If the topic is new to you, it can look like a daunting task, representing more work than value, especially when:

- Business performance goals and objectives are not yet clear.
- The performance test phase and budget were not planned early.
- The team is not experienced with the activities and tools.

The Implementation Guide is your starting point for understanding the basics of a performant solution beyond infrastructure.

To go further, FastTrack presented a series of Tech Talks to help define and execute the performance testing strategy.

The six parts will help you thoroughly prepare and efficiently deliver the iterations needed to ensure the final solution achieves the business goals and objectives.

With and without

With performance testing:

- The project team identifies and resolves performance issues with a high business impact early (meaning before go-live).
- The business validates a solution that meets its performance goals and objectives based on data (the test results) and not based on sentiment (the instinctive trust that everything will be fine).
- Hopefully, the hypercare phase is calm, as most performance problems have already been dealt with.

Without performance testing:

- The business uncovers the performance issues in production, as major process slowdowns and outages are reported, with negative impacts on solution adoption and team morale.
- The project team handles performance escalations (often in waves) reactively as the business raises them, creating a stressful environment that is poorly suited to resolution.
- More performance problems appear later as the solution starts to scale (for example, more users on the system, more transactions processed, or mixed workloads occurring in a day-in-the-life fashion). By then, resolution is even more difficult because, for example, the project team has been disbanded or fewer team members are available.

Performance issues are more complex and take longer to mitigate after go-live. Managing performance escalations on top of the other activities requires significant team effort, and because the business is suffering in the meantime, the stress level rises across the teams.

Closing

Let me repeat it: no matter how appealing it sounds, do not skip performance testing.

- As a partner, encourage and support the customer in adopting an efficient performance test strategy, one that runs under conditions similar to the production environment.
- As a customer, invest enough resources to execute meaningful performance tests that validate the achievement of your business goals and objectives.

I have seen unfortunate cases where even minimal performance testing could have saved time, money, and team morale:

- The business was slowed down as the final solution didn’t scale with peak workloads, and the heavy functional redesign took several months, during which the business compensated with additional people.
- A wave of basic performance issues overwhelmed the hypercare team, which didn’t have the capacity to resolve them promptly, leading to poor user adoption.

What do you think? Would you close your eyes and trust that the solution will be performant?